TrueCar L.E.D.

August 2015 –– The Santa Monica Pier is a magical place. The sprawling ocean. The Ferris Wheel. The sights and sounds of the people there. There’s a familiarity to it, a closeness, and this feeling is only magnified during its annual Twilight Concert Series.

This concert series is all about community, and Munkowitz and the team wanted to tap into that. They wanted to bring the space to life through a lighting universe where everything is interconnected, evoking a sense of warmth and tranquility, drawing the entire audience closer together.

The resulting experience is driven by the attendees, their participation expressed visually as data in the form of vibrant pulsing lights, creating a unified visual language that permeates the entire pier.

It’s an immersive, interactive environment that makes attendees feel not only that they experienced something special, but that they shared something with others, too. Just as TrueCar uses user data to price cars, we use our audience’s data to drive this unique lighting experience.

The Case Study

–– 01

The Treatment

–– Community

Our unifying theme is community, imagining vibrant images of fire and light synced with the signature sounds from the concert stage. The experience harkens back to a campfire, where we would stay warm and bonded close together in front of the flame, feeling like we were part of a larger group.

This warming entity would comprise a Lighting Universe that creates one cohesive experience, with every light acting as one pulse, driven by the Centerpiece. The Centerpiece, in turn, is driven by the audience. An attendee interacts with the Centerpiece, which then pulses light and color throughout the entire installation, creating a sense of warmth for the pier community.

The Centerpiece is much like a beating heart, with the installation being the body all around it. The heart emanates outwards to all the other lights, setting color themes and pulse states. This will all heighten the overall vibe and feeling for the audience. But to be clear, this is not EDM, with overwhelming sensory overload. Instead, our theme will mimic the concert’s vibe. It will be a chill, warm, pleasant experience that makes you feel like you’re a part of something together.

At its core, this is an audience-driven experience. Every light pulse emanates from the attendees’ data, making it a truly immersive and interactive experience for everyone participating in the Santa Monica Pier Concert Series.

–– 02

The Centerpiece

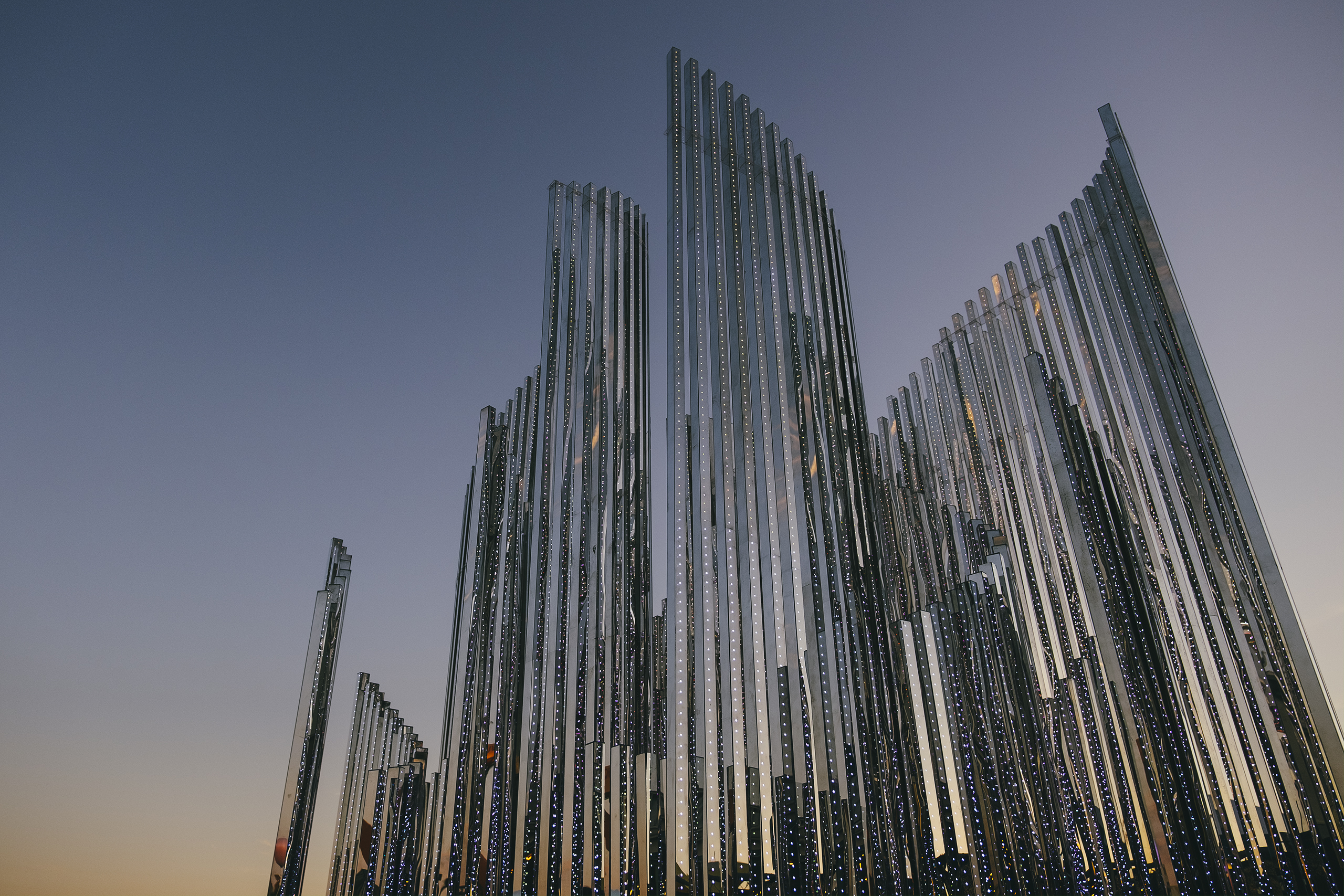

–– 03

The Centerpiece is the beating heart of the entire experience. It’s the beacon, the torch and heat source. It’s a volumetric LED sculpture that seamlessly interacts with the audience’s input. Their data will first drive the base of the Centerpiece, then the light will permeate upwards through the sculpture, like smoke dissipating through a chimney, before sending a pulse outward, affecting the entire installation. It will all be very gentle and elegant, supplementing the serene and calming feeling of the concert and its music.

There were several options for the Centerpiece’s shape, but the team was especially drawn to the repeating, iterative shapes of Classical Church Organs. Their volumetric curvature, repeating spires, and geometric assemblages were all sculptural qualities that could house thousands of LEDs within an elegant form.

The team then decided to coat the entire sculpture in security mirrors, so it would reflect the sky and community around it and become much more embedded in its surrounding environment. This mirrored surface, along with an interior of over 60,000 addressable LEDs, made the Centerpiece a thrilling and intensely captivating sculptural piece.

The most important thing was that the Centerpiece felt inviting and would beckon people to explore its interactive capabilities. The interaction models were varied. People could use gesture control to draw light within the form. The Centerpiece also took inputs from the sound stage and visualized the audio in a closely reactive fashion, turning the sculpture into a giant audio visualizer tightly integrated with the concert. Perhaps most importantly, it could analyze sentiment from text messages and uploaded photos and display that sentiment in the sculpture as light and color, connecting even more directly with the concert audience at the pier.

The Inputs

–– 04

Sentiment Analysis is an exciting field of development that combines text analysis, linguistics, and language processing to determine the “attitude” or “opinion” of a piece of media. It is already used in Google’s Prediction API, movie review sites, analysis of tweets during political campaigns, and many other applications.

Visitors to the pier were invited to engage with the environment through a custom-designed web app that communicated over a web socket. The app uploaded each submission to the Affectiva SDK, which returned integer values. From these, our sentiment analysis process extracted the “intention” of the message and scored its polarity on a range from positive to negative. This data was then pushed to the physical sculpture, which presented it visually as color and motion. As described previously, the sentiment then traveled upwards through the sculpture and out through the rest of the “universe” of the pier.
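To make the polarity-to-light idea concrete, here is a minimal Python sketch of how a score might be turned into color and pulse strength. It is an illustration only, not the code that ran on the pier: the function names, the -1.0 to 1.0 score range, and the two endpoint colors are all assumptions.

```python
# Hypothetical endpoint colors (8-bit RGB); the installation's real palette is not documented.
COOL = (40, 90, 255)    # assumed color for the most negative sentiment
WARM = (255, 120, 20)   # assumed color for the most positive sentiment


def polarity_to_rgb(polarity):
    """Blend linearly from COOL to WARM for a polarity in [-1.0, 1.0]."""
    p = max(-1.0, min(1.0, polarity))   # clamp out-of-range scores
    t = (p + 1.0) / 2.0                 # 0.0 = most negative, 1.0 = most positive
    return tuple(round(c + (w - c) * t) for c, w in zip(COOL, WARM))


def pulse_strength(polarity):
    """Stronger feelings, positive or negative, give a stronger pulse (0.0 to 1.0)."""
    return min(1.0, abs(polarity))


# Usage: a strongly positive message produces a warm, bright pulse.
color = polarity_to_rgb(0.8)
strength = pulse_strength(0.8)
```

A linear blend keeps neutral messages visually in between the two extremes, which suits a calm installation better than sudden jumps in hue.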

The photo submissions would drive several aspects of the installation: color, speed of reaction, and size of reaction. Each one would give us multiple integer values via the web app, which were then interpreted into data that controlled the response on the physical sculpture. The data itself was read using Affectiva’s SDK and then streamed over a live web socket connection directly to the media servers running the installation.

For the Gesture Control, the team used laser scanners, which allowed us to track up to 37 points of multi-touch input per side of the sculpture. Using all 4 sides of the sculpture, for example, we could track up to 148 points around it. The scanners lived in small housings on the ground and emitted a vertical and horizontal wave field covering a space roughly 25 feet wide by 20 feet tall. The reaction was near-instantaneous, with little to no delay, so the gesture control felt fluid and completely natural.
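The numbers above can be sketched in a few lines of Python. The point count and field dimensions come from the description; the function names and the idea of normalizing each touch to a 0 to 1 range before handing it to the visuals are assumptions made for illustration.

```python
POINTS_PER_SIDE = 37      # tracked points per scanner side, from the description
FIELD_WIDTH_FT = 25.0     # approximate width of one scan field
FIELD_HEIGHT_FT = 20.0    # approximate height of one scan field


def total_touch_points(sides=4):
    """Total trackable points when `sides` faces of the sculpture are scanned."""
    return POINTS_PER_SIDE * sides


def normalize_touch(x_ft, y_ft):
    """Convert a touch position in feet to (u, v) in 0..1, clamped to the field."""
    u = min(max(x_ft / FIELD_WIDTH_FT, 0.0), 1.0)
    v = min(max(y_ft / FIELD_HEIGHT_FT, 0.0), 1.0)
    return (u, v)


# Usage: four sides give 37 * 4 = 148 points; a hand at the middle of the
# field maps to the middle of the light canvas.
points = total_touch_points()
center = normalize_touch(12.5, 10.0)
```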

For the Audio Control, we created a live, interactive 3D object to control the animation of the sculpture, with multiple settings and animations for how the audio would drive the sculpture’s reaction. We took a left and right channel audio feed directly from the concert’s main mix and fed it into an audio interface, where we parsed the frequencies through audio analysis and applied them to reactions on our live-generated 3D object: its size, its color, the center of the reaction, or the exterior of the reaction. The audio analysis would drive a truly organic growth experience.
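As a rough sketch of what "parsing the frequencies" can look like, the Python below mixes a stereo feed to mono, measures the energy in three frequency bands with a plain discrete Fourier transform, and turns those energies into control values. The band edges, parameter names, and the mapping of bands to size, color, and detail are assumptions, and a real-time system would use an optimized FFT rather than this naive version.

```python
import cmath
import math

# Hypothetical band edges in Hz; the installation's actual bands are not documented.
BANDS = {"low": (20, 250), "mid": (250, 2000), "high": (2000, 4000)}


def mix_to_mono(left, right):
    """Average the left and right channels into one mono signal."""
    return [(l + r) / 2.0 for l, r in zip(left, right)]


def band_energies(samples, sample_rate, bands=BANDS):
    """Sum squared DFT magnitudes per frequency band (naive O(n^2) DFT)."""
    n = len(samples)
    energies = {name: 0.0 for name in bands}
    for k in range(1, n // 2):
        freq = k * sample_rate / n  # center frequency of bin k
        coeff = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                    for i, s in enumerate(samples))
        power = (abs(coeff) / n) ** 2
        for name, (lo, hi) in bands.items():
            if lo <= freq < hi:
                energies[name] += power
    return energies


def reaction_params(energies):
    """Normalize band energies into 0..1 controls for a generated 3D object."""
    total = sum(energies.values()) or 1.0  # avoid dividing by zero on silence
    return {
        "size": energies["low"] / total,         # assumed: bass drives size
        "color_shift": energies["mid"] / total,  # assumed: mids drive color
        "edge_detail": energies["high"] / total, # assumed: highs drive the exterior
    }


# Usage: a 125 Hz test tone on both channels should mostly drive "size".
RATE, N = 8000, 256
tone = [math.sin(2 * math.pi * 125 * i / RATE) for i in range(N)]
params = reaction_params(band_energies(mix_to_mono(tone, tone), RATE))
```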

The Entrance & Perimeter

–– 05

We envisioned tall, cylindrical LED Towers at the entrance, beckoning the patrons to enter the experience. The mere sight of these towers would tell the concertgoers that this is something new, something exciting: a truly unique experience awaits all those who walk through the illuminated gates. We thought of it like a forest of evergreen trees, but made up of LED lights, all controlled by the Centerpiece.

In addition to the LED Towers, we placed 15 inflatable sphere-shaped lanterns around the perimeter of the concert area, to enclose the region and create a sacred space of light and color. These lanterns were most visible from a distance and allowed the team to also infuse some TrueCar branding elements into their arrangement.

TRUECAR L.E.D. Credits

Client: TrueCar

Agency: Tiny Rebellion

Creative Directors: Jennifer Parke, Justin Smith, Alicia Benz

Producers: Stephen Fahlsing, Daryll Merchant, Peter McKinney

Account: Tyler Bell, Billy Derham, Jennifer Engleman, Brynn Wagner

Digital Production Company: Tool of North America

Director: GMUNK

Executive Producers: Chris Neff, Dustin Callif

Senior Producer: Jennifer Baker

Production Design: VT Pro Design

Executive Producer: Vartan Tchekmedyian

Creative Director: Michael Fullman

Design Director: Conor Grebel

Centerpiece Designer: Conor Grebel

Centerpiece TouchDesigner Artist: Matthew Wachtner

Centerpiece Content Animators: Conor Grebel, Jordan Ariel, Akiko Yamashita, Ali Benter

System Design/Engineering: Harry Souders

Crew Chief/Install Lead: Nico Yernazian, Hayk Khanjian

Post Production Company: Digital Twigs

Executive Producer: Mark Cramer

Cinematographer: Andrew Tomayko

Photographer: Bradley G Munkowitz

Editor: Andrew Tomayko

Composer: Keith Ruggiero (SoundsRed)

Audio Mix: Keith Ruggiero (SoundsRed)
